TESTING METHODOLOGY

The methodology of the P3 connect Mobile Benchmark is the result of P3’s many years of experience. It was carefully designed to evaluate and objectively compare the performance and service quality of Spain’s mobile networks from the users’ perspective.

The P3 connect Mobile Benchmark in Spain took place throughout October 2017. All samples were collected during the day, between 8.00 a.m. and 10.00 p.m. The network tests covered 17 large cities with more than 100,000 inhabitants each. Measurements were also taken in smaller towns as well as on connecting roads and motorways. The combination of test areas was selected to provide meaningful test results covering a relevant part of the Spanish population. The areas chosen for the 2017 test account for more than 11.5 million people, or approximately 24.7 per cent of Spain’s total population.

P3 conducted the tests with four drivetest cars, equipped with arrays of Samsung Galaxy S7 Cat 9 smartphones as well as a mixed allocation of Samsung Galaxy S7 and Sony Xperia XZ Cat 9 smartphones for simultaneous measurement of voice and data services.

Voice testing

Two smartphones per operator were used for voice evaluation in each car, resulting in a total of eight voice test devices per car. They set up test calls from car to car. Audio quality was evaluated with the HD-voice-capable, ITU-standardised POLQA wideband algorithm. All Spanish operators offer 4G-capable subscriptions, and all smartphones in the voice tests were set to 4G preferred mode, which reflects typical customer behaviour. At the time of the 2017 P3 connect Mobile Benchmark in Spain, Orange was the only operator offering VoLTE, so in its network the phones preferred this voice mode. Wherever 4G was available but VoLTE was not, the smartphones had to switch (“fall back”) to 2G or 3G for voice calls, so-called “circuit-switched fallback” (CSFB). In the networks of Movistar, Vodafone and Yoigo, this was the standard behaviour.
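The voice path described above can be summarised as a small decision function. This is a simplified sketch, not P3's test software: the `VoiceBearer` enum, the `voice_bearer` function and the hypothetical `volte_in_cell` flag (standing for "VoLTE usable at this location") are illustrative assumptions; only the per-operator VoLTE availability and the device counts come from the text.

```python
from enum import Enum

class VoiceBearer(Enum):
    VOLTE = "VoLTE"      # call carried over LTE (Voice over LTE)
    CSFB = "CSFB"        # phone camps on 4G but falls back to 2G/3G for the call
    LEGACY = "2G/3G"     # no 4G coverage: call set up on 2G/3G directly

# VoLTE availability per operator at the time of the October 2017 tests.
VOLTE_OFFERED = {"Movistar": False, "Orange": True, "Vodafone": False, "Yoigo": False}
PHONES_PER_OPERATOR = 2  # voice devices per operator in each car

def voice_bearer(operator: str, lte_coverage: bool, volte_in_cell: bool = True) -> VoiceBearer:
    """Simplified bearer choice for a phone set to 4G preferred mode.

    volte_in_cell is a hypothetical flag for "VoLTE usable at this location".
    """
    if not lte_coverage:
        return VoiceBearer.LEGACY
    if VOLTE_OFFERED[operator] and volte_in_cell:
        return VoiceBearer.VOLTE
    return VoiceBearer.CSFB

# Two phones for each of the four operators: eight voice devices per car.
VOICE_DEVICES_PER_CAR = PHONES_PER_OPERATOR * len(VOLTE_OFFERED)
```

For example, `voice_bearer("Orange", True)` yields VoLTE, while `voice_bearer("Vodafone", True)` yields CSFB, matching the standard behaviour described for Movistar, Vodafone and Yoigo.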

In order to further reflect typical smartphone usage scenarios during the voice tests, background data traffic was generated in a controlled way through random injection of small amounts of HTTP traffic. The voice test scores account for 38.8 per cent of the total benchmark results.
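The report states only that small amounts of HTTP traffic were injected at random. One common way to model such random background load is a Poisson process, i.e. exponentially distributed gaps between injections. The sketch below assumes exactly that; the mean gap, the payload size range and all names are hypothetical and not taken from P3's setup.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Injection:
    at_s: float       # offset from call start, in seconds
    size_bytes: int   # size of the small HTTP transfer to trigger

def background_schedule(call_duration_s: float, mean_gap_s: float = 10.0,
                        min_bytes: int = 1_000, max_bytes: int = 20_000,
                        seed: Optional[int] = None) -> List[Injection]:
    """Random injection times (Poisson process) with small, uniformly drawn sizes.

    mean_gap_s, min_bytes and max_bytes are hypothetical defaults.
    """
    rng = random.Random(seed)
    schedule: List[Injection] = []
    t = rng.expovariate(1.0 / mean_gap_s)   # exponential gaps between injections
    while t < call_duration_s:
        schedule.append(Injection(at_s=t, size_bytes=rng.randint(min_bytes, max_bytes)))
        t += rng.expovariate(1.0 / mean_gap_s)
    return schedule
```

Passing a fixed `seed` makes a schedule reproducible, which is useful when the same background load should be replayed across devices.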

Data testing

Data performance was measured using one smartphone per operator per car. Two of the drivetest cars were equipped with four Samsung Galaxy S7 each, while the other two carried four Sony Xperia XZ each. This setup was chosen to account for differences in data performance between smartphone models across the networks. In total, the drivetest cars carried 16 devices for the data tests. For all data test devices, the radio access technology was set to LTE preferred mode.

The web tests accessed web pages according to the widely recognised Alexa ranking. In addition, the static “Kepler” test web page as specified by ETSI (European Telecommunications Standards Institute) was used. To test data service performance, files of 3 MB (download) and 1 MB (upload) were transferred from or to a test server located on the Internet. In addition, peak data performance was tested in the uplink and downlink directions by measuring the amount of data transferred within a seven-second time period. Another discipline was the playback of YouTube videos. These tests took into account that YouTube dynamically adapts the video resolution to the available bandwidth. So, in addition to success ratios, start times and playouts without interruptions, the YouTube measurements also determined the average video resolution.
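Two of these metrics lend themselves to a short worked sketch. The seven-second window comes from the text; the sample format (timestamp, bytes) and the choice of a playback-time-weighted mean for the average YouTube resolution are assumptions, since the report does not specify how P3 aggregates either value.

```python
from typing import Sequence, Tuple

PEAK_WINDOW_S = 7.0  # fixed measurement period stated in the methodology

def peak_throughput_mbps(samples: Sequence[Tuple[float, int]],
                         window_s: float = PEAK_WINDOW_S) -> float:
    """Average rate over the fixed window, in Mbit/s.

    samples: (seconds since test start, bytes transferred) pairs (assumed format);
    only data transferred before window_s is counted.
    """
    total_bytes = sum(b for t, b in samples if t < window_s)
    return total_bytes * 8 / window_s / 1e6

def average_resolution(segments: Sequence[Tuple[float, int]]) -> float:
    """Playback-time-weighted mean of the vertical resolution (e.g. 720 for 720p).

    segments: (seconds played, resolution lines) pairs, one per constant-quality stretch.
    """
    played = sum(d for d, _ in segments)
    if played == 0:
        raise ValueError("no playback time recorded")
    return sum(d * r for d, r in segments) / played
```

For instance, a video played for 30 seconds at 1080p and then 10 seconds at 480p would be reported as an average of 930 lines under this weighting.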

All tests were conducted with the best-performing mobile plan available from each operator. Data scores account for 58.2 per cent of the total results.

Routes and samples

The test routes are shown on page 1 of this report. In the big cities and smaller towns indicated, the cars had to follow predefined routes. Altogether, the four test cars covered more than 11,520 kilometres, of which approximately 4,040 kilometres led through the big cities and smaller towns, while 7,480 kilometres were covered on connecting roads.

Performance indicators and rating

The score weighting reflects both the geographical distribution of the Spanish population and the relative importance of the usage scenarios. Therefore, 582 of the total of 1000 maximum points were assigned to the cities: 233 maximum points refer to the voice results and 349 maximum points to the data results. For the towns and the roads, a maximum of 194 points each is available. In each of these two categories, the possible maximum is 78 points for voice and 116 points for data. The table on pages 2 and 12 of this report shows the percentage of maximum points that each operator has achieved in each discipline.
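The point allocation can be reproduced from two simple weightings applied to the 970 drivetest points (1000 minus the 30 points for operational excellence). The report does not state these shares; the 60/20/20 split across cities, towns and roads and the 40/60 split between voice and data used below are inferred from the published figures, so treat this as a reconstruction under that assumption.

```python
from typing import Dict, Tuple

TOTAL_POINTS = 1000
DRIVETEST_POINTS = TOTAL_POINTS - 30   # 30 points go to operational excellence
# Inferred shares (not stated in the report) that reproduce its rounded figures.
AREA_SHARE = {"cities": 0.60, "towns": 0.20, "roads": 0.20}
CATEGORY_SHARE = {"voice": 0.40, "data": 0.60}

Key = Tuple[str, str]   # (area, category)

def max_points() -> Dict[Key, float]:
    """Unrounded maximum points per area and category."""
    return {(area, cat): DRIVETEST_POINTS * a * c
            for area, a in AREA_SHARE.items()
            for cat, c in CATEGORY_SHARE.items()}

def category_share_of_total(category: str) -> float:
    """Share of the 1000-point total, in per cent, for 'voice' or 'data'."""
    pts = sum(v for (_, cat), v in max_points().items() if cat == category)
    return 100 * pts / TOTAL_POINTS

def drivetest_score(pct_of_max: Dict[Key, float]) -> float:
    """Turn per-discipline 'percentage of maximum points' into points."""
    return sum(p * pct_of_max[key] / 100 for key, p in max_points().items())
```

Under this reading the unrounded maxima are 232.8 and 349.2 points for the cities and 77.6 and 116.4 points each for towns and roads. Voice then totals exactly 388 points (38.8 per cent) and data 582 points (58.2 per cent), which would explain why the quoted shares differ slightly from the sums of the rounded values (389 and 581).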

Crowdsourcing operational excellence

The remaining 30 points are awarded for operational excellence. For this survey, P3 uses a crowdsourcing method. To acquire these data, P3 considers connectivity reports that are gathered by background diagnosis processes included in a number of popular smartphone apps. While the customer uses one of these apps, a diagnosis report is generated daily and is evaluated per hour. As such reports only contain information about the current network availability, they generate just a small number of bytes per message and do not include any personal user data.

In order to differentiate network glitches from normal variations in network coverage, we apply a precise definition of “service degradation”: a degradation is an event where data connectivity is impacted by a number of cases that significantly exceeds the expectation level. To judge whether an hour of interest is an hour with degraded service, the algorithm looks at a sliding window of 168 hours (one week) before the hour of interest. This ensures that we only consider actual network service degradations, as opposed to a simple loss of network coverage of the respective smartphone due to prolonged indoor stays or similar reasons.
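The sliding-window check can be illustrated as follows. The 168-hour window is taken from the text, but the report does not disclose how the expectation level or "significantly exceeds" is defined. This sketch assumes, purely for illustration, that the expectation is the window mean and that an hour counts as degraded when its count of impacted connections exceeds the mean by more than `k` standard deviations; `k` is a hypothetical parameter.

```python
from statistics import mean, pstdev
from typing import List, Sequence

WINDOW_H = 168  # one week of hourly history, as in the methodology

def degraded_hours(issue_counts: Sequence[float], k: float = 3.0) -> List[int]:
    """Indices of hours whose count of impacted connections clearly exceeds
    the expectation derived from the preceding 168 hours.

    Assumption: expectation = window mean; "significantly exceeds" means
    above mean + k * population standard deviation of the window.
    """
    counts = list(issue_counts)
    flagged: List[int] = []
    for h in range(WINDOW_H, len(counts)):
        window = counts[h - WINDOW_H:h]          # the 168 hours before hour h
        threshold = mean(window) + k * pstdev(window)
        if counts[h] > threshold:
            flagged.append(h)
    return flagged
```

Because the baseline is re-estimated from the preceding week for every hour, a long but ordinary drop in samples (for example, a phone spending a weekend indoors) shifts the expectation rather than triggering a degradation on its own.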

In order to ensure the statistical relevance of this approach, a valid assessment month must fulfil clearly designated prerequisites. A valid assessment hour requires a predefined number of samples per hour and per operator; the exact number depends on factors such as market size and number of operators. A valid assessment month must consist of at least 90 per cent valid assessment hours (again per month and per operator). Degradations observed during the night, between midnight and 6.00 a.m., are not taken into account.
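These prerequisites translate into two simple filters. The 90 per cent rule and the night-time exclusion come from the text; the minimum number of samples per hour is market-specific and not published, so `min_samples` is a placeholder. The sketch also assumes that "a predefined number" means "at least that many samples" and that the 90 per cent is computed over all hours supplied for the month.

```python
from typing import List, Sequence, Tuple

NIGHT_HOURS = range(0, 6)    # midnight to 6.00 a.m.: degradations are not counted
MIN_VALID_HOUR_SHARE = 0.90  # at least 90 per cent valid assessment hours per month

def is_valid_month(samples_per_hour: Sequence[int], min_samples: int) -> bool:
    """True if enough hours of the month (one operator) reach min_samples.

    min_samples is a hypothetical, market-specific threshold.
    """
    if not samples_per_hour:
        return False
    valid = sum(1 for n in samples_per_hour if n >= min_samples)
    return valid / len(samples_per_hour) >= MIN_VALID_HOUR_SHARE

def countable_degradations(degraded: Sequence[Tuple[int, int]]) -> List[Tuple[int, int]]:
    """Drop (day, hour_of_day) degradations that fall into the night hours."""
    return [(day, hour) for day, hour in degraded if hour not in NIGHT_HOURS]
```

For a 30-day month (720 hours), at least 648 hours would need to meet the sample threshold.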

Sophisticated scoring model for operational excellence

The relevant KPIs are then based on the number of days on which degradations occurred as well as the total number of hours affected by service degradations. In the scoring model that we apply to the gathered crowdsourcing data, 60 per cent of the available points (in this case a maximum of 18) are based on the number of days affected by service degradations, thus representing larger-scale network availability. The remaining 40 per cent of the total score (here 12 points) is derived from the total number of hours affected by degradations, thus representing a finer-grained measurement of operational excellence.

Each considered month is then represented by a maximum of ten achievable points. The maximum of six points (60 per cent) for the number of affected days is reduced by one point for each day affected by a service degradation: one affected day costs one point, and so on, until six affected days in a month reduce this part of the score to zero.

The remaining four points are awarded based on the total number of hours affected by degradations. Here, we apply increments of six hours: six hours with degradations cost one point, twelve hours cost two points, and so on, until a total of 24 affected hours reduces this part of the score to zero.
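The monthly scoring rules can be written down directly. Two points are assumptions: that only completed six-hour increments cost a point (so five affected hours cost nothing), and that three assessment months are summed, which is inferred from ten points per month and the 30-point maximum (18 day points and 12 hour points in total).

```python
from typing import Sequence, Tuple

DAY_POINTS = 6    # 60 per cent of the ten points per month
HOUR_POINTS = 4   # 40 per cent of the ten points per month
HOUR_STEP = 6     # one point lost per completed six degraded hours (assumed floor)

def month_score(affected_days: int, affected_hours: int) -> int:
    """Operational excellence points for one month (0 to 10)."""
    day_part = max(0, DAY_POINTS - affected_days)
    hour_part = max(0, HOUR_POINTS - affected_hours // HOUR_STEP)
    return day_part + hour_part

def operational_excellence(months: Sequence[Tuple[int, int]]) -> int:
    """Sum over (affected_days, affected_hours) per month; three months give 30 max."""
    return sum(month_score(days, hours) for days, hours in months)
```

For example, a month with two affected days and twelve affected hours would score 4 + 2 = 6 of 10 points, while a month with no degradations keeps all ten.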

Three boxes were mounted in the back and side windows of each measuring car in order to support twelve smartphones per car.

Each box housed four smartphones, which allowed the simultaneous testing of all four mobile operators.

Hakan Ekmen, managing director of P3 communications GmbH, and Bernd Theiss, head of connect’s test lab, inspect the testing equipment.

CONCLUSION

Three out of four Spanish operators improved over last year’s results. The advancements of the smallest contender, Yoigo, are especially impressive.

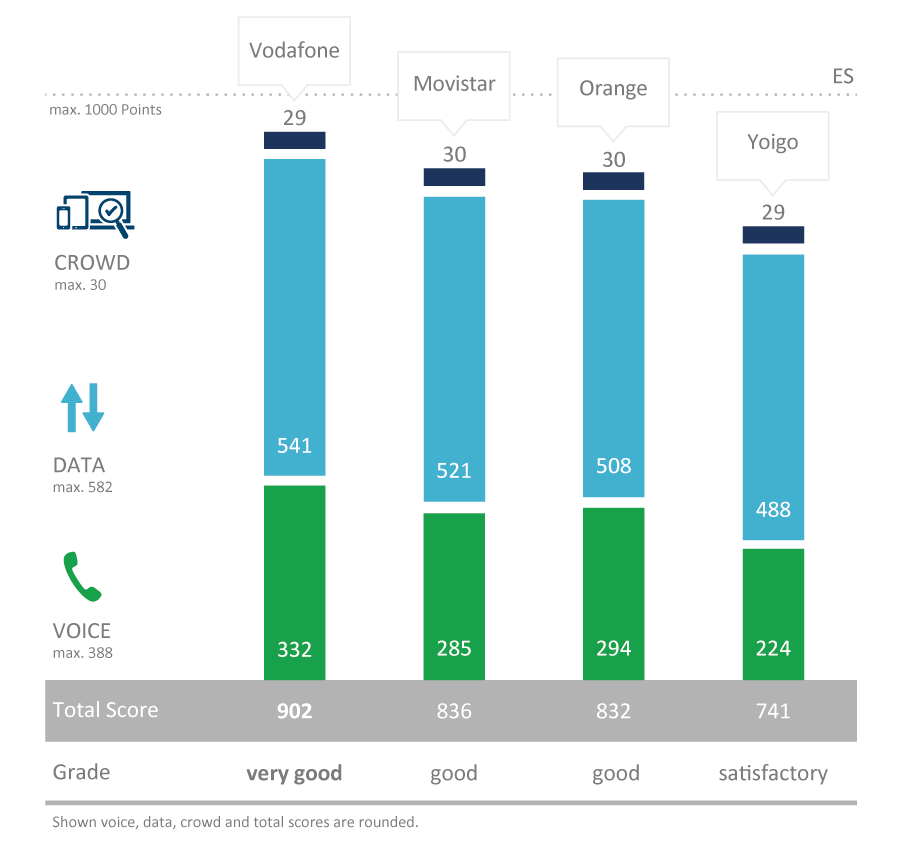

For the third time in a row, Vodafone is the clear winner of the P3 connect Mobile Benchmark in Spain. This may not come as a surprise, but one should bear in mind that it takes a lot of effort to secure the top position.

While Yoigo and Orange also show considerable improvements in their scores, Movistar remains essentially at the same level. Telefónica’s mobile network scores quite well in the data discipline, but falls behind its competitor Orange in the voice tests. All in all, this is a good result for Spain’s largest operator, with Movistar well deserving the second rank.

Orange also improves over 2016’s results and, while still scoring third, narrows the gap to Movistar to only four points. In the voice discipline, Orange delivers short call setup times and good speech quality thanks to its introduction of VoLTE. Currently, Orange is the only Spanish operator which offers this modern technology to its customers.

Yoigo improves by more than 100 points

Yoigo makes the biggest jump ahead. Even when taking into account the change in maximum available points introduced by our new crowd score, the smallest contender improved its score by more than 100 points. This is a remarkable accomplishment and good news for Yoigo’s customers. Beyond that, our new crowdsourced operational excellence score also delivers pleasing results, confirming a high level of stability and availability of all Spanish networks in the observation period.

As in the two previous years, Vodafone is once more the overall winner in Spain thanks to excellent voice and data results. Vodafone takes a clear lead in both categories and also shows a compelling level of operational excellence. The lead over its pursuers Movistar and Orange is even more distinct than in 2016.

Compared to the previous year, Movistar has improved in the data category, but has lost some points in the voice discipline. In our new operational excellence evaluation, the Telefónica brand reaches the full possible score of 30 points. In the future, Movistar will have to improve in the voice category if it wants to stay ahead of Orange.

France Telecom’s Spanish brand comes in third, close behind its constant rival Movistar. In the voice discipline in big cities, Orange has already managed to outrank the Telefónica network, and in the other categories it follows at close distance. Our crowd score also attests to a high level of operational excellence.

Yoigo shows the biggest improvement in comparison to our 2016 benchmark, which it mainly achieves in the data category. Improving from last year’s grade “sufficient” to this year’s “satisfactory” is a big step. Yoigo also scores high in our new operational excellence discipline. All of this is really good news for its customers.